To guarantee a good user experience and meet Service-Level Agreements (SLAs), VoIP providers require methods and metrics to assess the quality of their services accurately. However, this is easier said than done. The final user experience of a call is the result of a complicated mix of technical network factors and the customer’s subjective opinion.

With coding distortion, packet loss, packet delay, jitter, and many other factors all coming together to produce the final audio, service providers must collect meaningful data to get insights on the delivered call quality.

The interplay between network components, and the detrimental effects each one produces, makes it a major challenge to isolate specific performance issues and their root causes. For example, performance-degrading factors may mask one another or converge to create significantly worse call quality.

Monitoring calls over IP networks requires sophisticated systems to apply advanced models that consider the customer’s subjective experience based on objective measurements of specific technical impairments. This often includes a version of the E-model presented by the International Telecommunication Union (ITU) in recommendation G.107.

Using call duration to understand call quality

In contrast to these technical models, some within the industry utilize Average Call Duration (ACD) as a simplified, more cost-effective method of assessing subjective call quality. The assumption is that longer ACD automatically translates to better user experiences.

While some past research backs up this assumption, those studies were performed on mobile networks at a time when calls were charged based on their duration, and therefore users were incentivized to end calls as quickly as possible. The advent of flat rates changed user behavior and—at least partially—invalidated these early results.

More recent studies, which also consider factors relevant to modern VoIP such as the transition to IP-based packet switching and the use of new codecs, cast doubt on whether ACD can be used effectively to assess call quality.

In particular, the paper “Analysis of the Dependency of Call Duration on the Quality of VoIP Calls” demonstrates potential issues for service providers that assume ACD can act as a definitive sign of call quality.

Authored by Jan Holub, Michael Wallbaum, Noah Smith, and Hakob Avetisyan, the study analyzes Call Detail Records (CDRs) for over 16 million live calls made using IP-based telecommunications networks. These CDRs were generated by Qrystal, a non-intrusive, commercially available VoIP monitoring system that returns information on both Session Initiation Protocol (SIP) messages and Real-time Transport Protocol (RTP) packets.

Qrystal segments RTP flows into five-second timeslices as recommended by ETSI TR 103639. Each timeslice carries several hundred metrics that summarize call quality, including basic information about the packets such as the source and destination IP addresses, the codec used, and packet losses. These recorded metrics are fed into the E-model to estimate subjective call quality as a Mean Opinion Score (MOS).
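To make the last step concrete, the sketch below shows the standard conversion from the E-model rating factor R to an estimated MOS, as given in ITU-T G.107. It covers only this final mapping. How a monitoring system derives R from its timeslice metrics is not described here, and the function name `r_to_mos` is an assumption chosen for illustration.

```python
def r_to_mos(r: float) -> float:
    """Map an E-model rating factor R to an estimated MOS (G.107 mapping)."""
    if r <= 0:
        return 1.0   # floor of the estimated MOS scale
    if r >= 100:
        return 4.5   # ceiling of the estimated MOS scale
    # Cubic mapping used for 0 < R < 100
    return 1.0 + 0.035 * r + r * (r - 60.0) * (100.0 - r) * 7e-6


if __name__ == "__main__":
    # Sample R values chosen for illustration only
    for r in (50.0, 70.0, 93.2):
        print(f"R = {r:5.1f} -> estimated MOS = {r_to_mos(r):.2f}")
```

Because the mapping is non-linear, equal steps in R do not produce equal steps in MOS, which is one reason MOS is usually reported alongside the underlying impairments.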

Another metric derived from the CDR is the Critical Minute Ratio (CMR), a KPI defined in ETSI TR 103639 Annex A. The CMR is the ratio of “critical” or subpar five-second segments to the total number of segments. For example, a CMR of 5% means one of every 20 timeslices is affected by critical jitter or loss.
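The ratio itself is simple to compute once each timeslice has been classified. The following is a minimal sketch, not the definition from ETSI TR 103639: the `Timeslice` fields and both thresholds are hypothetical placeholders, since the criteria that make a segment "critical" are not given here.

```python
from dataclasses import dataclass


@dataclass
class Timeslice:
    loss_pct: float   # packet loss within the five-second slice, in percent
    jitter_ms: float  # jitter within the slice, in milliseconds


# Hypothetical thresholds for illustration only; not taken from the ETSI report
LOSS_CRITICAL_PCT = 3.0
JITTER_CRITICAL_MS = 40.0


def is_critical(ts: Timeslice) -> bool:
    """Flag a slice whose loss or jitter exceeds the assumed thresholds."""
    return ts.loss_pct > LOSS_CRITICAL_PCT or ts.jitter_ms > JITTER_CRITICAL_MS


def critical_minute_ratio(slices: list[Timeslice]) -> float:
    """Return the share of critical slices among all slices (0.0 to 1.0)."""
    if not slices:
        return 0.0
    critical = sum(1 for ts in slices if is_critical(ts))
    return critical / len(slices)


if __name__ == "__main__":
    # 19 clean slices and 1 slice with heavy loss: one in 20 is critical
    slices = [Timeslice(0.0, 5.0)] * 19 + [Timeslice(10.0, 5.0)]
    print(f"CMR = {critical_minute_ratio(slices):.0%}")
```

With one critical slice out of 20, the sketch reproduces the 5% case from the example.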

By analyzing CDRs across the extensive database, the authors found challenges in mapping ACD to call quality. This is shown when analyzing ACD and call quality for multiple parameters, including:

- Chosen codec

- Transmission quality

- Estimated user experience

Call duration for different codecs

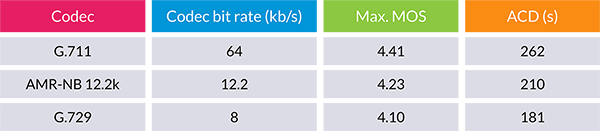

While the raw data set contained calls with 22 different codecs, to simplify the analysis, the paper only considers CDRs using the top three codecs:

- G.711

- G.729

- AMR-NB 12.2k

Each is a narrowband codec that uses a different compression algorithm and bit rate. The table below shows how ACD varies for each codec:

Only calls with a CMR of 0% were considered to remove network effects and isolate the codec’s effect. The results show a significant improvement in MOS and longer ACD for higher bit-rate codecs. For example, ACD is 40% higher when utilizing the G.711 codec compared to the rather low-quality G.729 codec.
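The filtering and grouping step can be sketched as follows. The record layout and every duration below are made-up toy values, picked so the arithmetic lands on a 40% gap. They are not figures from the paper, and `acd_by_codec` is a hypothetical helper name.

```python
from collections import defaultdict

# Hypothetical CDR rows: (codec, call duration in seconds, CMR as a fraction)
cdrs = [
    ("G.711", 140.0, 0.0),
    ("G.711", 140.0, 0.0),
    ("G.729", 100.0, 0.0),
    ("G.729", 100.0, 0.0),
    ("G.729", 30.0, 0.25),  # excluded below: CMR > 0 means network impairments
]


def acd_by_codec(records):
    """Average call duration per codec, using only calls with a CMR of 0."""
    totals = defaultdict(lambda: [0.0, 0])  # codec -> [duration sum, call count]
    for codec, duration_s, cmr in records:
        if cmr != 0.0:
            continue  # drop calls affected by the network
        totals[codec][0] += duration_s
        totals[codec][1] += 1
    return {codec: total / count for codec, (total, count) in totals.items()}


if __name__ == "__main__":
    acd = acd_by_codec(cdrs)
    gain = acd["G.711"] / acd["G.729"] - 1.0
    print(f"ACD per codec: {acd}")
    print(f"G.711 ACD relative to G.729: {gain:+.0%}")
```

Excluding calls with a non-zero CMR before averaging is what keeps network effects from leaking into the codec comparison.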

Call duration for estimated user experience

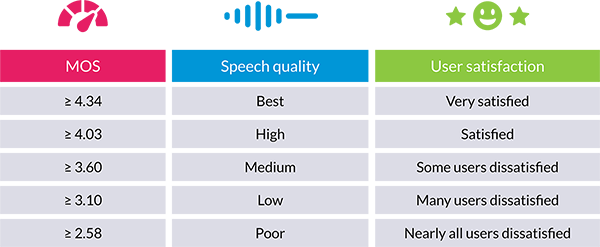

As mentioned earlier, user experience is subjective and service providers go to great lengths to estimate this subjectivity to produce MOS scores. The table below shows how MOS scores

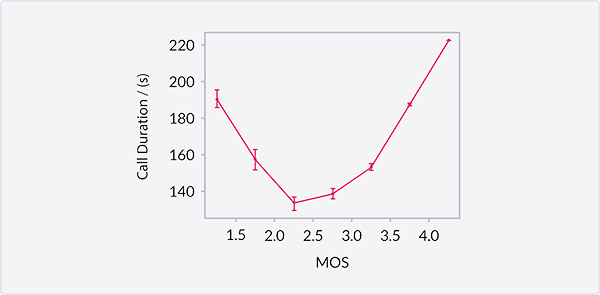

The graph below shows ACD over the average MOS per call:

ACD drops from a high of 222s at MOS = 4.25 to a low of 133s at MOS = 2.25. Surprisingly, it then rises again, reaching 190s at the worst MOS recorded (1.25). In other words, for the lowest MOS values, the data shows a rising ACD even as speech quality worsens and user dissatisfaction grows.
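A quick way to see the problem is to test whether ACD rises consistently with MOS. The sketch below uses only the three (MOS, ACD) pairs quoted above. The helper name `acd_rises_with_mos` is an assumption, and three points are of course a simplification of the full curve.

```python
# (average MOS, ACD in seconds) pairs quoted in the text
points = [(1.25, 190), (2.25, 133), (4.25, 222)]


def acd_rises_with_mos(pairs):
    """Return True if ACD never decreases as MOS increases."""
    ordered = sorted(pairs)                # sort by MOS, ascending
    acds = [acd for _, acd in ordered]
    return all(a <= b for a, b in zip(acds, acds[1:]))


if __name__ == "__main__":
    if acd_rises_with_mos(points):
        print("ACD rises consistently with MOS")
    else:
        print("ACD does not rise consistently with MOS")
```

Because the check fails, a single ACD value cannot be mapped back to a single MOS value, which is the core limitation of using duration as a quality proxy.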

Why you can’t assume call duration always translates to call quality

The assumption that ACD equates to call quality and user experience seems to hold for moderate and high-quality calls. However, there are significant discrepancies between the assumed correlation and the data for lower-quality calls. As a result, it is impossible to define a consistent relationship between the two parameters, i.e., increasing call duration does not always mean increasing call quality.

Specifically, the data shows increasing call durations for moderate to poor quality when measured using either the transmission quality or the estimated user experience. For example, from the graph above, an ACD of 190s could correspond to an average MOS score (roughly 3.75) or an abysmal MOS score (<1.5).

There could be multiple reasons behind this surprising result, from people talking slower and constantly repeating themselves instead of terminating the call, to the network using more robust channel coding schemes which introduce higher one-way delays.

Whatever the reasoning, it presents significant problems for VoIP service providers relying on call duration as their main indicator of call quality.

Whether the cause is a poor air interface or problematic routing, service providers need to be able to differentiate between the various levels of poor-quality calls, and the data shows ACD is incapable of making those distinctions.

Service providers instead need to delve deeper and implement monitoring systems that provide metrics capable of delivering consistent, actionable data. With analysis based on ACD alone, network issues could easily go undetected.

Summary

Research shows call duration can no longer demonstrate the quality of modern VoIP calls. While codec choice and network performance influence call duration, the common assumption that ACD increases consistently with call quality no longer holds. There are many possible reasons for this discrepancy, but the solution is to consider new, more in-depth metrics to calculate call quality and estimate user experience.